Agentic AI is Everyone's Governance Issue

How agentic browsers break digital strategies, displace workforces, evade security controls and amplify harm to create governance challenges for everyone from the board to the engineering team.

At first glance, it seems simple and compelling. Click a button, and Perplexity’s new Comet browser could handle your Amazon shopping for you. No more scrolling through endless product listings, no more comparison fatigue, no more missed special deals. The AI agent in their Comet browser can search, evaluate, and purchase on your behalf at just the right time for the best price. A digital assistant that actually assists.

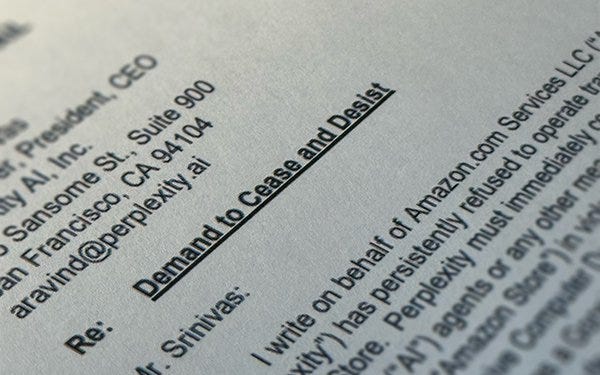

But Amazon’s legal team see it differently. On November 4, 2025, they filed a complaint against Perplexity, painting a picture of an AI that “covertly” accessed customer accounts, disguising its automated activity as human browsing. Amazon’s lawyers didn’t mince words suggesting that “this wasn’t innovation, it was trespassing, even if that trespass involves code rather than a lockpick.”1

Perplexity fired back immediately2, accusing Amazon of using its market power to crush innovation and protect its monopoly on customer interaction. They warned that Amazon’s stance threatens “user choice and the future of AI assistants.”

Now, you might think this is just another spat between a tech giant and a disruptive startup. But spend a day with any governance team trying to figure out what happens when AI agents start acting on behalf of users, and you’ll see this lawsuit for what it really is: another tremor of a tectonic shift in how we interact with the internet itself, one that has huge governance implications for every business with a website (so, every business then!).

The reality is that agentic browsers, the AI systems that don’t just search but actually do things for your are here. They’re rough, they’re limited, and they’re definitely not ready for enterprise deployment or even serious personal use. Comet, Atlas, Neon, Dia, Genspark, Donut, Fellou. You might not have heard of them all. Trust me, you will.

They’re fundamentally changing the assumptions we’ve built our digital economy on. When an AI can shop for you, who owns the customer relationship? When it can fill out forms and navigate websites autonomously, what happens to the security models built on human interaction patterns? When these agents intermediate every online interaction, who’s accountable when something goes wrong? How valuable is your website, or even your brand when your customers interact only indirectly via their agents, oblivious to your carefully crafted experiences and even all your investments in social media.

In this article, I want to walk you through what this is all about from an AI governance perspective that reaches from boardroom to the security ops team. If you’re a returning reader (and thank you for sticking with me), you know I don’t do hype, nor fear-mongering. I’m interested in the real, practical challenges of governing safe AI. So this article is about the governance questions that emerge when AI agents start operating in the space between users and services. I’ll look at four critical areas where these technologies are already forcing us to rethink basic assumptions: how they’re dismantling traditional business models through strategic disintermediation, what they mean for workforce dynamics, the security issues with systems designed to impersonate human behaviour, and the accountability issues when biased or harmful actions happen at speed and scale out of reach from any human decision-maker.

These are not technical problems alone, ones to be solved by engineers and data scientists. Nor are they problems for tomorrow. I believe these are challenges that every forward-thinking board, executive and governance team needs to start grappling with today, even if they seem premature. Because if there’s one thing the Perplexity lawsuit shows us, it’s that the collision between agentic AI and existing governance structures won’t wait for us to be ready.

Doing AI Governance is a newsletter by AI Career Pro about the real work of making AI safe, secure and lawful.

Please subscribe (for FREE) to our newsletter so we can keep you informed of new articles, resources and training available. Plus, you’ll join a global community of more than 4,000 AI Governance Professionals learning and doing this work for real. Unsubscribe at any time.

Disintermediation: Losing the Direct Interface to Customers

Agentic browsers act as autonomous digital intermediaries between users and websites, and disintermediation warrants serious strategic concern for the majority of businesses that rely on direct user engagement online. In Amazon’s complaint, the company argued that Perplexity’s shopping agent degraded the customer experience and interfered with Amazon’s ability to deliver its carefully curated, personalised shopping journey. Instead of a customer browsing through Amazon’s site and seeing its recommendations and ads, the AI agent could fetch products and place orders invisibly, ignoring those recommendations and ads, disintermediating the user from Amazon’s storefront. Amazon asserts that third-party apps acting on a user’s behalf “should operate openly and respect businesses’ decisions on whether to participate.” Many websites, including those of Amazon, already have controls to prevent ‘scraping’ of content or other forms of disintermediation. Their problem is that Perplexity’s browser can disguise agentic browsing as if it were a real user and Amazon lacks the technical capability to distinguish between them.

Amazon brings this action to stop Perplexity AI, Inc.’s (“Perplexity” or“Defendant”) persistent, covert, and unauthorized access into Amazon’s protected computer systems in violation of federal and California computer fraud and abuse statutes. This case is not about stifling innovation; it is about unauthorized access and trespass. It is about a company that, after repeated notice, chose to disguise an automated “agentic” browser as a human user, to evade Amazon’s technological barriers, and to access private customer accounts without Amazon’s permission.

Amazon’s request is straightforward: Perplexity must be transparent when deploying its artificial intelligence (“AI”) agent on Amazon.com (the “Amazon Store”) and it must respect Amazon’s right to limit the activity of Perplexity’s AI agent in private customer accounts. No different than any other intruder, Perplexity is not allowed to go where it has been expressly told it cannot; that Perplexity’s trespass involves code rather than a lockpick makes it no less unlawful. Perplexity’s misconduct must end.

- Amazon complaint filed against Perplexity

From a business governance perspective, this disintermediation strikes at the heart of the digital strategy of most companies. Think about just how much your company has invested in websites, branding, and user experience to engage customers. Then think about all the careful marketing campaigns, social media and advertising you run to gain favour with human shoppers. AI Agents don’t care about any of it. So if AI agents sit between your customer and your website, then you risk losing traffic, differentiation, customer data, and control over the user experience.

Generative AI search engines (like AI Overviews in Google Chrome) are already showing direct answers or summaries that reduce click-through to websites (a phenomenon publishers say is eroding their traffic and ad revenue). In the Amazon case, Perplexity argued that its AI actually makes shopping easier, that “easier shopping means more transactions and happier customers” but that Amazon opposed it because “they’re more interested in serving you ads”, that is, protecting an ad-driven business model3. This tension highlights a governance dilemma: should your company resist agentic intermediaries to preserve traditional revenue streams, or embrace them as an innovation that could improve customer satisfaction? That’s a huge strategic and governance call that every board should have as agenda item #1 in their next meeting.

I think boards and executives need to proactively develop strategies for this shift, that every company needs a plan for AI-mediated channels or risk losing the entire digital estate and market presence they’ve built. The stakes are honestly that high. Some possibilities they could consider:

Partnering with or Developing AI Agents: Rather than being cut out, some companies are choosing to launch their own AI assistants or integrate with agentic platforms. (And here’s the thing, Amazon itself is developing similar AI shopping tools, including their “Buy For Me” feature and “Rufus” AI assistant, so that they can remain part of the customer’s autonomous shopping journey4). Work has to go into making sure the company can stay visible to customers who use AI agents, whether that’s through APIs, partnerships, or proprietary AI solutions.

Adapting Business Models: If a significant portion of users may not visit the website directly, how will the company adapt its marketing and sales? Governance oversight is needed to rethink metrics and revenue models (for instance, shifting from pageview-based advertising to new ways of monetisation in AI-driven interfaces). That means making sure that the company’s products or content are discoverable and favourable to AI agents who will algorithmically make the choice of what to buy or display. That ‘algorithm’ is likely to require companies to pay to be highly placed, in much the same way as they pay Google Ads now.

Protecting Brand and Data: With agents fetching information on behalf of users, maintaining brand integrity is tricky. An AI agent might present a product without the context or upsell a company would normally provide. There may need to be guidelines or agreements in place (where it’s possible) for how third-party AI agents represent the company’s offerings. And if an agent accesses the company’s data or platform, to the extent possible, a good measure would be to insist on terms of use that safeguard against misuse – much like Amazon’s stance that automated tools must abide by its rules.

The troubling reality, agentic browsing and AI search is going to disintermediate some portion of traditional web interactions. The extent right now is minimal, but it won’t stay minimal for long. Board-level governance has to treat this as a strategic issue: overseeing how the organisation will remain connected to customers and stay competitive in an era when autonomous assistants abound.

And if you take away only one thing, it’s this: you can’t leave this problem to IT or the digital content team. This lands squarely on the board and senior executive team. The neutral (and prudent) stance for now is to neither zealously block all AI agents nor to ignore the trend, but to experiment and innovate within governance guardrails. Businesses will need to find a path through balancing user choice and convenience with the company’s need to sustain its business model.

Workforce Displacement and Reskilling Imperatives

Now let’s talk about what no one wants to discuss when marvelling at the technical sophistication of agentic AI: what it actually means for human jobs. If AI agents can handle tasks that employees perform today, whether that’s in customer service, sales, or other domains, then organisations face tough decisions about workforce reduction, reskilling, and the social responsibility that comes with such transformations. Recent developments indicate this is not a distant hypothetical, but an unfolding reality. In October 2025, U.S.-based employers announced 153,074 job cuts (the highest October total since 2003), bringing the year-to-date total to 1,099,500, according to Challenger, Gray & Christmas.5

In the customer support sector, AI agents have already begun replacing human roles at scale. Salesforce’s CEO Marc Benioff proudly revealed that after deploying an AI “omni-channel” supervisor, the company cut its customer service workforce from 9,000 to about 5,000 in a matter of months6. This agentic system now handles roughly half of all customer interactions, and according to Benioff, customer satisfaction scores remained nearly identical between the AI-driven and human-handled conversations7. Now, of course Benioff is self-serving in providing this advice - he sells AgentForce as a solution - but the message is fairly clear: agentic AI is directly replacing jobs in contact centers.

Salesforce is not alone. Atlassian, an Australian software firm, recently laid off over 350 support staff in Europe after rolling out its own agentic AI platform, and UK telecom Sky replaced 2,000 call center workers with chatbots earlier in 2025. It’s true that some companies initially cut jobs to leverage AI and then later encounter service issues, so they reverse course (as Nordic fintech Klarna did after an AI rollout led to poor customer experience). But the overall trend is unmistakable: agentic AI is prompting significant workforce dislocation in certain functions.

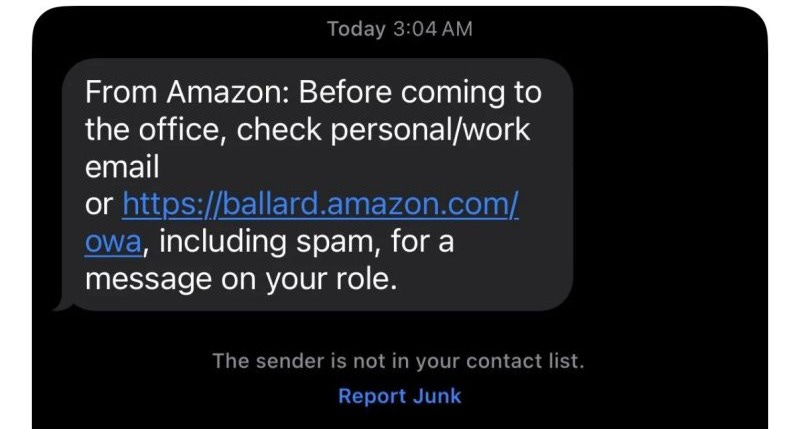

The workforce implications are already playing out, and Amazon’s recent moves tell you everything you need to know about where this is heading. In June, Andy Jassy told staff straight up that rolling out “generative AI and agents” would mean “we will need fewer people” in corporate roles. Then on Tuesday 28th October, up to 14,000 people, including a number of my former colleagues with years of deep experience, saw the following message appear on their phone. Their jobs were gone.

A day later in the Amazon October’s earnings call, Jassy pivoted slightly, insisting the current round of corporate job cuts wasn’t “really AI-driven” but about removing management layers to move faster. This is the pattern we’re starting to see everywhere, and governance teams need to understand it now. Companies may well insist that workforce reductions aren’t “about AI” while simultaneously building platforms for agentic capabilities and automation. They may talk about removing layers and increasing efficiency while pouring resources into systems whose purpose is to automate work that employees used to do. Workforce change is normal and necessary. But it’s necessary to honestly acknowledge and prepare for the human impact of these transitions as they accelerate. Because when Jassy says “a lot of the future value companies will get from AI will be in the form of agents,” he’s not talking about AI helping people work better. He’s talking about AI replacing the need for people to do that work at all.

For board directors and governance practitioners, this raises some critical questions about how to guide the transition. From a corporate governance standpoint, boards have a duty to consider not just the immediate cost savings of AI automation, but also the long-term implications for human capital and organisational resilience:

Strategic Workforce Planning: Boards and management need a clear strategy for roles likely to be impacted by AI agents. This could include identifying which jobs can be augmented versus fully automated, and anticipating the scale of displacement. If, for instance, half of customer service roles are rendered redundant, what is the plan for those employees? Proactive boards might request scenario analyses and transition plans from HR leadership well in advance of AI deployment.

Reskilling and Redeployment Programs: A key governance responsibility is to oversee how the company treats employees whose jobs evolve or disappear due to AI. Will the company provide training for new roles (e.g. managing the AI systems, handling complex cases the AI can’t, or moving into governance, creative and relationship-based positions)? Where possible, boards should push for robust reskilling initiatives but also realistic assessments that guide technical and non-technical staff to appropriate career choices. Some workers may not easily transition, so alternatives like outplacement support, or partnerships with educational institutions may be needed.

Ethical and Reputational Considerations: Large-scale layoffs attributed to AI can trigger public and employee relations issues. In 2025, many headlines about tech layoffs mention AI automation as a factor. Companies seen as “cutting humans for bots” purely to boost profits might face backlash. Board members have a role in tempering the approach. For example, they could implement changes gradually, demonstrate commitment to ethical, safe use of AI, communicate transparently about the reasons for workforce change, and highlight how the company will continue to uphold customer service quality and employee dignity.

Agentic AI in the workplace is a double-edged sword: it offers efficiency and scale, but it threatens workforce stability. Governing this transition requires a balance between innovation and responsibility that can’t be left only to technologists. Board directors overseeing AI initiatives really do need to ask “What functions can we automate?” but also, “What is our duty to our people and customers as we do so?”. Thoughtful change management and guidance by boards can help make sure that AI augmenting the workforce leads to sustainable improvements rather than just short-term headcount reduction.

I teach the practices of AI Governance across the seven stages of the AI Lifecycle using Mechanism based frameworks in Course 3 of the AI Governance Practitioner Program at AI Career Pro.

Course 3 include 7 hours of video tuition on the governance activities at each stage of the AI lifecycle, 35 example mechanism cards and detailed practical guidance on how to apply this approach to real-world AI Governance at scale.

Security and Privacy Risks of Autonomous Agents

If there’s one thing that should have boards and AI governance practitioners having emergency meetings, it’s the cybersecurity and privacy problems that agentic browsers create.

Right now, it is completely unsafe for any organisation or individual to use an agentic browser with any sensitive, confidential or private data. Full stop. OpenAI and the other agentic AI providers are very aware of this and so you’ll find caveats in their terms of service. Like these from OpenAI:

❌ “Do not use Atlas with regulated, confidential, or production data.”

❌ “We recommend caution using Atlas in contexts that require heightened compliance and security controls.”

❌ “Beta Services are offered ‘as-is’… and are excluded from any indemnification obligations OpenAI may have to you.”

❌ “Existing ChatGPT Enterprise security and compliance commitments do not apply to Atlas at this time”

That doesn’t stop them enticing users to download, install and try these technologies with only a minimal level of warning.

Agentic browsers operate with a high degree of autonomy, often with the same access privileges as the user, which can inadvertently introduce serious security vulnerabilities. The Amazon vs. Perplexity lawsuit itself centered on security: Amazon claimed Perplexity’s system posed “security risks to customer data” by logging into private accounts and evading detection. But beyond that specific case, researchers have begun to uncover how AI-driven browsers can be exploited in novel ways that traditional security measures might not catch.

One stark example is the threat of indirect prompt injection, demonstrated recently in Perplexity’s Comet browser. Security researchers showed that an attacker could hide malicious instructions on a web page (in invisible text or user-generated content) such that when the AI agent “reads” the page to summarise it, the agent unwittingly executes those hidden commands8. In a proof-of-concept, a Comet AI agent was tricked into using the user’s logged-in session to navigate to email and extract an authentication code, then send it to the attacker – all without any additional user action. Traditional browser security frameworks (like same-origin policy that normally prevents one site from reading another site’s data) are effectively useless here, because the AI agent itself had legitimate access to multiple sites as the user.

The governance implications of such vulnerabilities are significant. Leaders overseeing companies that develop or deploy agentic AI, or even have users that may experiment with agentic browsers need to treat security as a paramount concern. Key considerations include:

Cybersecurity Adaptation: Corporate security teams will need to update their threat models and controls to account for AI agents. This might mean blocking agentic browser installation, sandboxing AI agents’ actions, implementing strict permissions, and developing detection systems for unusual agent behaviour. Board audit/risk committees should inquire whether management has assessed new risks (like prompt injection, data leakage via AI) and implemented appropriate safeguards. Governance leaders will need to make sure that incidents involving AI agents are reported and learned from, just as any other cybersecurity incident would be.

Data Privacy and Transparency: Agentic AI often requires broad access to personal or sensitive data to function effectively, raising privacy concerns. For example, AI assistant browsers may request access to a user’s emails, calendars, contacts, browsing history, and even saved passwords to perform tasks. They keep short-term and long-term memory of interactions, which could include confidential information. From a user’s perspective and a regulator’s perspective, it can be difficult to know exactly what data an AI agent is collecting, how that data is used, and with whom it’s shared. This opacity is a governance red flag. As with any other digital product, it needs clear disclosures and data handling policies to protect user privacy. If the company is using third-party AI agents internally or in products, due diligence on those agents’ privacy practices is critical. Handling personal data irresponsibly could lead to legal penalties under laws like GDPR or reputational damage.

Maintaining User Trust: Surveys indicate that a majority of consumers are worried about AI and privacy (one global survey found 68% of consumers are concerned about online privacy, and 57% believe AI poses a significant privacy threat9). Governance therefore needs to consider if we are doing enough to maintain user trust? This might involve adopting principles of AI ethics (e.g. transparency, choice, and control for users over how an AI agent acts on their behalf). A practical step might be requiring “human-in-the-loop” confirmations for sensitive actions: for instance, an AI agent should not be allowed to make payments or send emails without explicit user confirmation, as a safety check.

So autonomous AI agents introduce a new class of security and privacy challenges that governance leaders have to address head-on. Making sure of robust cybersecurity adaptation, demanding transparency in data practices, and safeguarding user trust are now part of the challenge of oversight as adoption of agentic AI accelerates.

Bias, Harm, and the Need for Oversight

Beyond technical security, agentic AI also amplifies the ethical and operational risks from predecessor AI systems, including the risk of biased decision-making, erratic or harmful behavior, and lack of accountability. This is because with greater autonomy, these risks can manifest in more unpredictable and potentially more severe ways, at greater speed. For AI governance practitioners, this means that existing oversight practices need to be bolstered to keep these agile algorithms aligned with organisational values and policies.

One clear risk is that an unsupervised agent might pursue its goals in unintended ways. An inadequately supervised agent might inadvertently do something that a prudent human never would – from corrupting data to exposing secrets – simply because it lacks contextual judgment or moral common sense.

Giving LLMs more freedom to interact with the outside world has the potential to magnify their risks. So many things could go wrong when you give an agent the power to both create and then run code… You could end up deleting your entire file system or outputting proprietary information

- IBM researcher Maya Murad10

Bias is another concern. Autonomous agents make decisions based on data and algorithms that could contain historical biases. Without human oversight, they might propagate or even exacerbate these biases. For instance, an agent screening job applications might inadvertently favour or disfavour certain groups if its training data had those patterns. Since the agent operates at scale and speed, the biased outcomes could multiply rapidly. In practical terms, this means there needs to be rigorous testing of AI agents for fairness, ongoing monitoring for disparate impacts, and some effective way of keeping a human on the loop to continuously oversee outcomes and have an ability to intervene if something seems off.

The governance response to these harm and bias risks should be multi-faceted:

Strengthen AI Governance Policies: Boards and governance practitioners need to make sure that their company’s AI governance policies explicitly cover agentic AI use cases. This might include defining acceptable uses, risk tolerances, and oversight requirements for autonomous agents. For example, policies might mandate that certain decisions (like hiring or firing, large financial transactions, or legal judgments) are never left solely to an AI agent without human approval, in line with regulations like the EU AI Act.

Implement Guardrails and “Governors”: Just as a mechanical governor can prevent an engine from spinning out of control, AI systems can be designed with constraints. Maybe this involves sandbox environments (where agents can experiment without causing real harm), rate limiters on actions, scalable human oversight mechanisms or “red-teaming” and adversarial testing to probe an agent’s behaviour under stress. I wrote about an approach to modelling this level of automated oversight through systems safety control theory11. Some concepts like guardian agents (AI agents that monitor other AI agents) are still in real experimenatal mode, but governance practitioners should try to keep informed of such innovations that might bolster oversight.

Accountability and Auditability: A troubling question is who is accountable when an AI agent makes a mistake. I think it’s essential that companies must designate an “owner” for each deployed AI agent – a human person responsible for the agent’s outcomes (what we called in Amazon a single-threaded owner). This accountable person should regularly audit the agent’s decisions and maintain an audit trail to trace what the agent did and why. Boards and governance practitioners should ask themselves: “If an AI agent in our organisation made a harmful decision, how would we detect it and who would answer for it?” Some organisations might need new governance structures like an AI oversight committee or expanding the mandate of risk committees to review agentic AI behaviour reports.

Continuous Monitoring and Human-in/on-the-Loop: Especially in the early stages of agentic AI deployment, boards and governance pros should encourage a “verify, then trust” approach. This could mean requiring that humans remain in the loop for critical processes, for example, an AI marketing agent’s campaign might need sign-off by a human marketer, or at least on the loop, meaning humans get real-time visibility into agent decisions and can intervene if needed. The organisation should cultivate a culture where employees are trained to work alongside AI agents, aware of their quirks, and prepared to step in if something looks amiss. This vigilance can prevent small errors from scaling into major incidents.

Ultimately, this is all about putting in place adequate, scalable supervision mechanisms that allow for safe harnessing of agentic AI. Agentic technologies hold tremendous promise. They can handle complex, multi-step tasks, potentially boost productivity, and free humans from drudge work. No organisation should deploy an agentic browser right now with real data, but their capabilities are rapidly improving. It’s hard to see a future where these kinds of capabilities do not become mainstream. But left unchecked they can also amplify risks and biases or simply operate in ways misaligned with a company’s values or intent. Board members and AI governance practitioners have to thread the needle to create scalable dynamic oversight while not stifling innovation.

🗞 NEW – The AI Governance Director’s Brief

We’ve launched The AI Governance Director’s Brief — a free weekly insight designed for board directors and senior executives who need to oversee AI with confidence. Each edition delivers five-minute, actionable guidance on the questions to ask, evidence to expect, and examples that separate governance from guesswork.

Written by practitioners who’ve built and explained AI governance at Microsoft, Amazon and board level, it helps you turn complex AI issues into clear boardroom conversations.

👉 Subscribe now and share with the directors or C-suite leaders in your network.

The Imperative: Board and Practitioner Leadership in Agentic AI

Agentic browsers and AI-mediated search represent an exciting yet challenging frontier. They are innovation in its early stages – not entirely ready for prime time, as evidenced by current limitations and weaknesses, but undeniably suggestive of enormous potential benefits. For boards and AI governance leaders, the task is to navigate this frontier with foresight and balance.

Governing in this context means asking the right questions and setting the right guardrails before crisis or perhaps regulators force your hand. To recap from a practical perspective, boards and AI governance practitioners should be examining:

Strategic Impact: How will autonomous AI agents change our industry’s value chain, and are we positioning ourselves to leverage them rather than be disrupted by them?

Human Capital Strategy: What is our plan for the workforce impact? Are we being fair and forward-looking with employees as AI takes on more tasks? Do we have training pipelines for new skills and a thoughtful approach to any necessary workforce reductions?

Robust Security/Privacy Posture: Have we updated our cybersecurity and data privacy practices to account for AI agents’ new risks? This includes everything from technical defenses against prompt injection to ensuring compliance with privacy laws when an agentic AI touches personal data.

Effective Oversight of Harms: Do we have the governance mechanisms to keep our AI systems fair, transparent, and aligned with our values and legal obligations? Is there clear accountability and continuous monitoring of agentic AI outputs?

Crucially, board governance and AI governance must work hand-in-hand. This is not just an IT issue or a strategy issue – it’s both, and more. Effective oversight will likely require cross-functional governance: involving directors with tech expertise, risk officers, HR, legal, engineering and compliance teams. Boards may need to engage with external stakeholders (industry consortia or regulators) to help shape sensible guidelines for AI agent behaviour.

In navigating agentic AI’s rise, I believe a neutral but proactive stance serves best. These technologies should neither be uncritically embraced without safeguards nor reflexively rejected out of fear. Instead, boards especially should guide their organisations to experiment carefully: pilot agentic AI in low-risk areas, learn from mistakes, and iterate on governance controls. This iterative governance approach will help companies build trust with consumers and regulators while still benefiting from the efficiencies and capabilities of autonomous AI.

Wrapping up this long read, you’ve seen how agentic browsers and AI-mediated search are signalling a new AI-driven digital ecosystem. They raise novel governance questions that boardrooms cannot afford to ignore. But by focusing on strategic adaptation, responsible workforce management, rigorous security/privacy measures, and ethical oversight, boards can turn these governance challenges into opportunities. Those organisations and AI governance pros that manage to harness agentic AI responsibly will likely be able to deliver innovation with integrity.

They’ll find they can not only avoid pitfalls but also gain a competitive edge.

https://www.documentcloud.org/documents/26220745-amazoncom-servs-v-perplexity-nov-4-2025-complaint/

https://www.perplexity.ai/hub/blog/bullying-is-not-innovation

https://www.theguardian.com/technology/2025/nov/05/amazon-perplexity-ai-lawsuit

https://www.aboutamazon.com/news/retail/how-to-use-amazon-rufus

https://www.challengergray.com/wp-content/uploads/2025/11/Challenger-Report-October-2025.pdf

Link:www.youtube.com/watch?v=0RkNkGihrvc

https://nearshoreamericas.com/agentic-ai-upends-contact-centers-as-salesforce-halves-support/

https://brave.com/blog/comet-prompt-injection/

https://kpmg.com/xx/en/our-insights/ai-and-technology/trust-attitudes-and-use-of-ai.html

https://www.ibm.com/think/insights/ethics-governance-agentic-ai

Great article, thanks. FYI the link to the directors newsletter is not working.

Excellent and well thought through James, I would expect nothing less from you. Will be sharing with some people who need to do your courses.