From good intentions to real AI Governance

How the concepts of adaptive mechanisms at the core of Amazon's success can transform your approach to AI Governance from good intent to real impact.

HireVue. Interview better, hire smarter. That was the slogan of a company that shows just how hard it can be to translate good intent into real AI governance.

Starting as a video-interview company in 2010, at one point even shipping webcams to candidates, HireVue spent the mid-2010s grafting AI onto recorded interviews. By 2014 it was marketing assessments that analysed what candidates said and how they said it, along with their facial expressions. It used those smiles and moments of hesitation to infer job-related traits: do you appear too meek to be a business leader, too casual to be a dependable worker? It was an intriguing idea, but not one backed by any real science. Yet by 2017, HireVue was rocketing to success. Its customers valued an inexpensive way to interview and shortlist candidates, and then to make hiring decisions confidently, at least in part on the basis of subtle clues concealed in facial expressions.

Their scale was significant. By May 2019, HireVue reported more than 10 million video interviews completed worldwide,[2] and in October 2019 coverage in the Washington Post suggested that more than a million candidates had been assessed with HireVue’s AI.[1] By late 2020 the company reported 17.5 million video interviews.[3][4]

As adoption grew, HireVue presented its program as backed by cognitive science and well governed: it touted its AI Ethical Principles[5] and highlighted its expert advisory board. It commissioned an external algorithmic audit and published an AI Explainability Statement.[6] The company’s January 12, 2021 press release positioned these as industry firsts and tied them to ongoing fairness testing and transparency.[7]

In the same press release, HireVue explained that it had quietly removed the video signal from its AI assessments some months earlier. Its CEO later said it just “wasn’t worth the concern.”[8]

What the press release didn’t mention is how evidence and expert concern had been mounting. A widely cited 2019 review in Psychological Science in the Public Interest concluded “you generally cannot infer emotions or traits from faces without rich context”.[9] AI Now’s 2019 report[10] urged banning affect recognition in high-stakes settings like hiring. Audits of facial-analysis technology around the same time (e.g., Gender Shades[11]) documented sharp demographic error gaps. Policymakers began to respond: Illinois’s Artificial Intelligence Video Interview Act (2019) requires notice, consent, and deletion.[12]

In November 2019, the advocacy group EPIC filed an FTC complaint alleging unfair and deceptive practices, challenging HireVue’s use of facial analysis and its marketing of that capability.[13]

The pressure had become too great. HireVue had removed the video analysis, shifting emphasis to language- and audio-based scoring instead. Is that any safer, or any less prone to bias or inaccuracy? The reality is we don’t fully know, although the US EEOC and the US Department of Justice have warned that such tools can lead to unlawful discrimination against people with different accents, speech difficulties or unusual styles of communication.[14]

Doing AI Governance is a newsletter by AI Career Pro about the real work of making AI safe, secure and lawful.

Please subscribe (for FREE) to our newsletter so we can keep you informed of new articles, resources and training available. Plus, you’ll join a global community of more than 4,000 AI Governance Pros learning and doing this work for real. Unsubscribe at any time.

The Complexity Problem: Governance Across the AI Lifecycle

The HireVue case reveals a fundamental challenge in AI governance: the need to manage evolving systems across their entire lifecycle, not just at deployment. HireVue didn’t fail to implement governance. It’s evident they had positive intent. They had ethical principles, advisory boards, external audits, and transparency statements. Yet their governance framework didn’t respond to the accumulation of scientific, regulatory, and reputational risks that ultimately forced a major architectural retreat.

This isn’t unusual. AI systems accumulate risk as they move from abstract ideas to operational systems that affect real people. Each stage in their development presents unique governance challenges, yet these stages are deeply interconnected. Analysing facial movements was an impressive engineering capability when HireVue adopted it (Microsoft launched a similar experimental capability in 2015 and took until 2022 to remove it, long after HireVue had responded[15]). HireVue built facial affect recognition into the core of their offering. That single architectural choice shaped their data collection requirements, model training approaches, validation protocols and, ultimately, their regulatory exposure. When they removed facial analysis at some point in 2020, they didn’t just need to update a model. They would have had to reconsider their entire technical stack, retrain and retire systems, revalidate performance, and rebuild stakeholder trust.

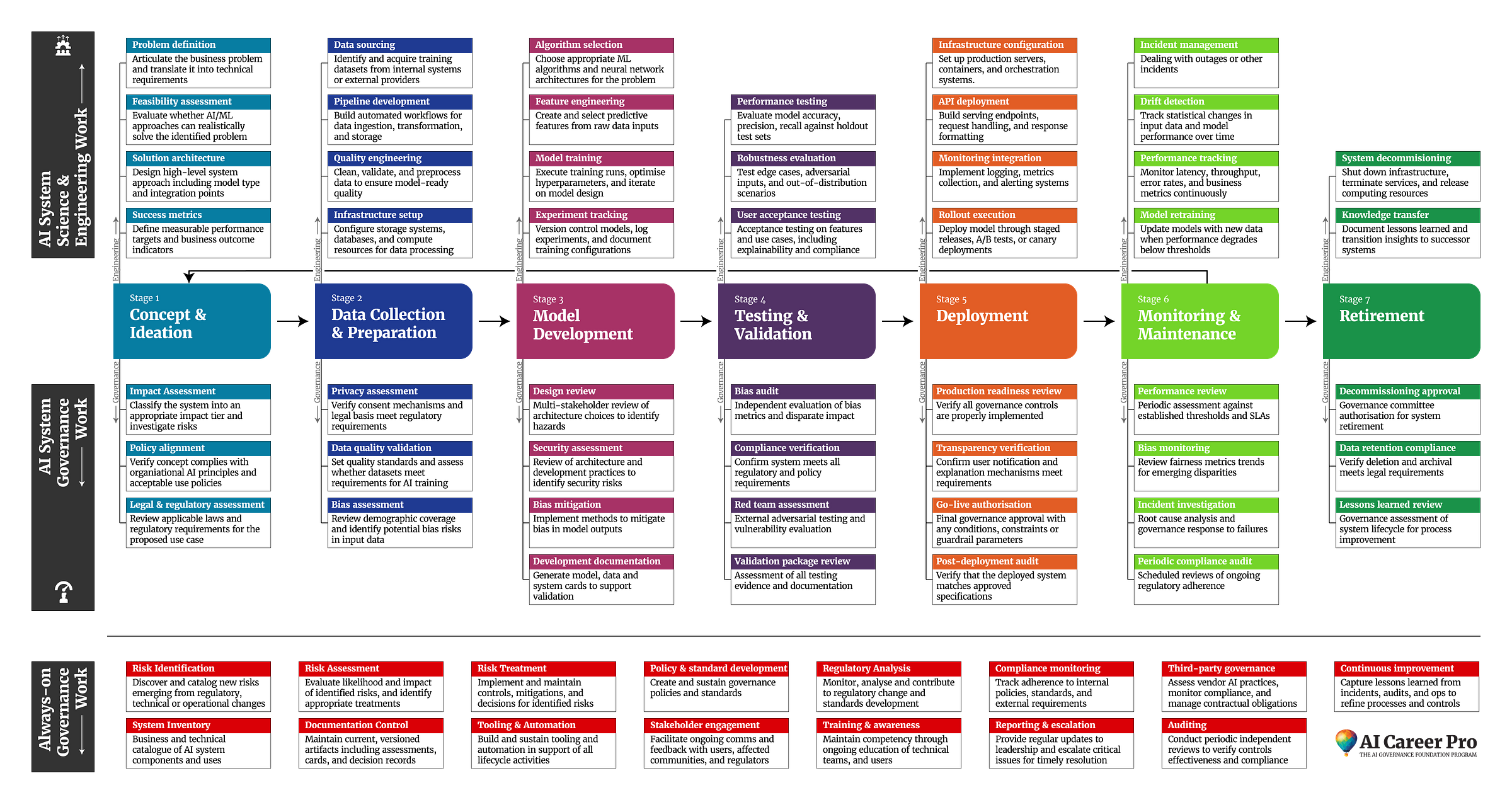

To understand this cascading effect of choices, you need to recognise the seven distinct stages through which AI systems evolve: ideation, design, data and model development, testing and validation, deployment, monitoring and operation, and finally retirement. At each stage, engineering work proceeds alongside governance activities or, better still, governance work is embedded in engineering activities. During ideation, while teams explore use cases and feasibility, governance work covers regulatory scans and risk assessments. Data and model development combines technical training work with bias assessment and privacy controls. Testing validates both performance metrics and fairness criteria. Deployment integrates technical rollout with compliance verification. Monitoring tracks both system performance and emerging risks. Even retirement requires both technical decommissioning and proper handling of data retention and stakeholder transitions.

These stages don’t exist in isolation. They’re supported by organisational governance mechanisms that operate continuously across the entire AI portfolio: comprehensive system inventories track what AI exists and where, enterprise risk management aggregates threats across systems, documentation control ensures knowledge persists, policy frameworks provide consistent standards, and training programs build organisational capability. This layered approach creates what we might call “always-on” governance: a persistent organisational capability that supports individual systems while learning from the collective experience of the entire AI estate. The effectiveness of governance at any single stage depends not just on what happens within that stage, but on how well these organisational mechanisms connect and reinforce the work happening across all stages and systems. It’s a complex picture of enmeshed engineering and governance activities.

The AI System Lifecycle is anything but linear

But don’t get the wrong idea. This isn’t the old waterfall development model. The AI lifecycle isn’t a neat sequence of independent phases; it’s a complex web of concurrent activities, iterative refinements, and feedback loops. Data scientists might be experimenting with new architectures while engineers deploy previous versions. Compliance teams assess risks of features still in development while product teams gather feedback that reshapes fundamental requirements. Your governance framework has to accommodate this reality of parallel workstreams, where any deployment might trigger a return to earlier stages. Force it into a waterfall and you destroy the pace of innovation. Yet it’s still useful to conceptualise the stages in sequence first, to understand the interconnections, feed-forward and feedback loops between them.
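The feed-forward and feedback structure can be sketched in code. The minimal Python example below models the seven stages as an ordered enum and lets a system either advance one stage or loop back to any earlier stage. The `Stage` enum, the `can_transition` function and the transition rule itself are illustrative assumptions rather than a standard; in particular, this sketch only allows retirement from the monitoring and operation stage.

```python
from enum import IntEnum


class Stage(IntEnum):
    """The seven lifecycle stages, in their conceptual order."""
    IDEATION = 1
    DESIGN = 2
    DATA_AND_MODEL_DEVELOPMENT = 3
    TESTING_AND_VALIDATION = 4
    DEPLOYMENT = 5
    MONITORING_AND_OPERATION = 6
    RETIREMENT = 7


def can_transition(current: Stage, target: Stage) -> bool:
    """Illustrative rule: advance exactly one stage, or loop back to any earlier one.

    Retirement is treated as terminal, so nothing transitions out of it.
    """
    if current is Stage.RETIREMENT:
        return False
    if target == current + 1:
        return True  # feed-forward: the next conceptual stage
    return target < current  # feedback: any earlier stage may be revisited


# A concern raised during monitoring can send the system back to design.
print(can_transition(Stage.MONITORING_AND_OPERATION, Stage.DESIGN))  # True
print(can_transition(Stage.IDEATION, Stage.DEPLOYMENT))  # False
```

Keeping the rule in one function means a governance tool can log every loop back to an earlier stage as an event worth reviewing.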

Just think about what happens at each transition point. When a team moves from ideation to design, they’re making architectural decisions that will constrain every subsequent choice. The decision to use certain data sources determines privacy obligations. The choice of model architecture affects explainability requirements. The selection of performance metrics shapes what kinds of bias you can detect and mitigate. These aren’t independent technical decisions; they’re governance commitments that accumulate and compound.

The temporal dynamics add another layer of complexity. Models drift, data distributions shift, and what was acceptable yesterday becomes problematic tomorrow. HireVue’s facial analysis capabilities might have seemed innovative in 2014, but by 2018 the scientific consensus had shifted, regulatory frameworks had evolved, and public expectations had transformed. Static governance approaches that treat AI systems as fixed artifacts rather than evolving capabilities inevitably fail to address these dynamics.
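One common way to make a shifting data distribution measurable is the population stability index (PSI), which compares the binned distribution of a feature or model score at validation time with the distribution seen in production: PSI is the sum over bins of (actual − expected) × ln(actual / expected). The sketch below assumes the bin proportions are already computed, uses a small floor so empty bins do not cause a division by zero, and treats 0.2 as the alert threshold. That threshold is a widely used rule of thumb, not a standard, and the score distributions are made-up illustrative numbers.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions given as proportions per bin.

    expected: bin proportions from the validation (baseline) data
    actual:   bin proportions observed in production
    eps:      floor applied to each proportion so empty bins stay finite
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


baseline = [0.25, 0.25, 0.25, 0.25]    # illustrative score distribution at validation
production = [0.10, 0.20, 0.30, 0.40]  # illustrative score distribution this month

psi = population_stability_index(baseline, production)
# Assumed alert threshold: 0.2 is a common rule of thumb, not a standard.
print(f"PSI = {psi:.3f}", "-> review" if psi > 0.2 else "-> stable")  # PSI = 0.228 -> review
```

A metric like this only catches statistical drift. It would not have flagged the shift in scientific consensus and regulation around affect recognition, which is why monitoring has to track emerging external risks as well as system performance.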

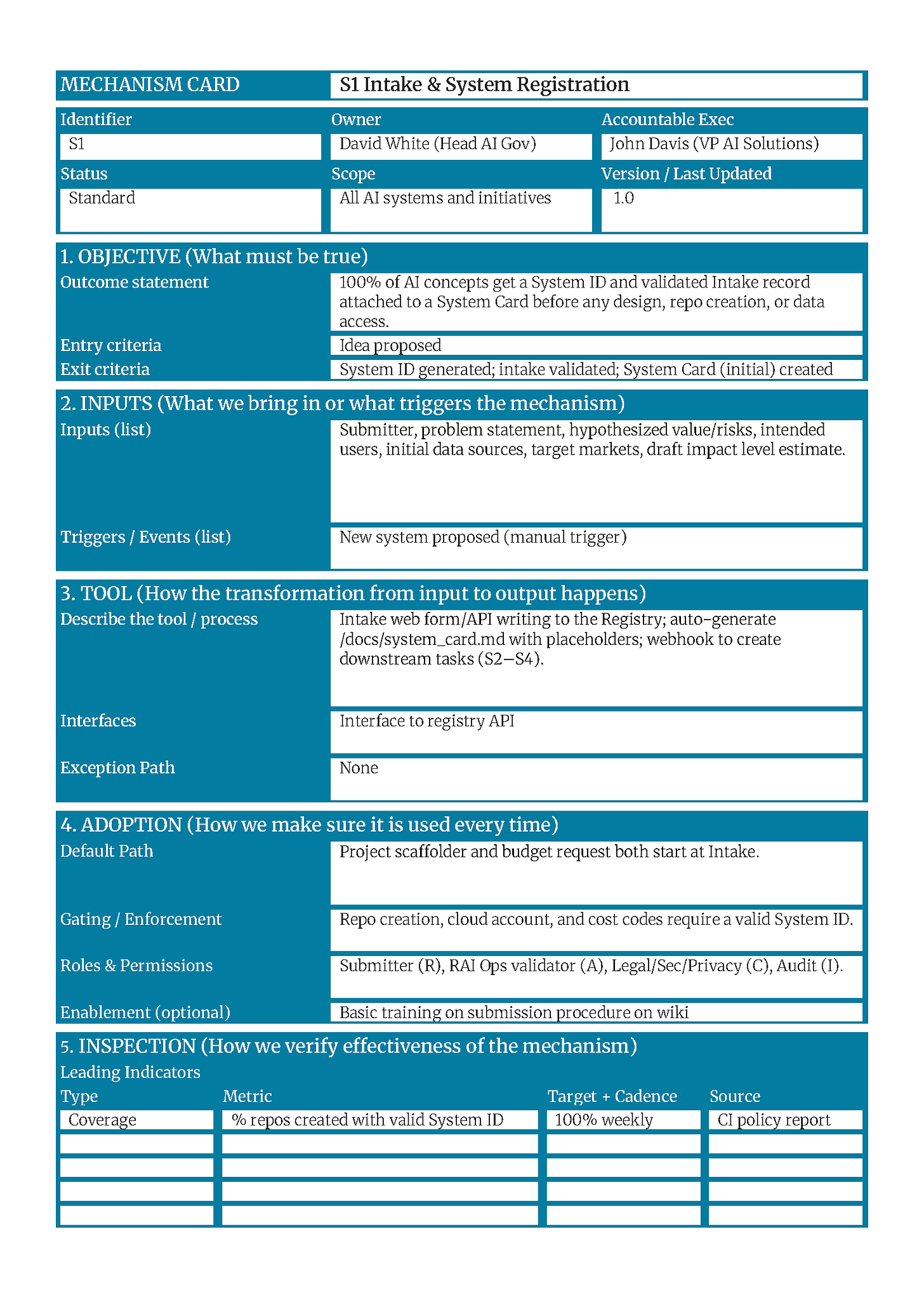

I teach the practices of AI Governance across the seven stages of the AI Lifecycle using mechanism-based frameworks in Course 3 of the AI Governance Practitioner Program at AI Career Pro.

Course 3 includes 7 hours of video tuition on the governance activities at each stage of the AI lifecycle, 35 example mechanism cards, and detailed practical guidance on applying this approach to real-world AI Governance at scale.

Cutting through the complexity: Mechanisms

This is where Jeff Bezos’s famous observation becomes salient: “Good intentions never work, you need good mechanisms to make anything happen.”

You see, HireVue had good intentions. They had ethical principles, advisory boards and transparency commitments. They had governance structures and accountable individuals. They engaged reputable auditors to perform algorithmic bias assessments, and they shared the report. But intentions don’t automatically translate into consistent or rapid action, especially when teams face competing pressures. It’s hard to imagine that HireVue was unaware of the mounting evidence against the effectiveness of video-based affect recognition, yet they delayed action.

At Amazon, where I was fortunate to lead AI assurance initiatives, we faced similarly complex challenges. We had dozens of product teams building AI capabilities, from document translation to large language model hosting. Each team moved fast, innovated independently, and had deep domain expertise. Publishing governance policies and either mandating or hoping for compliance simply wouldn’t work. We needed something fundamentally different to deal with the dynamics, complexity and distributed accountability.

But as any current or former Amazon employee will tell you, Amazon’s operational excellence isn’t built on policies. It runs on mechanisms. You might have heard of some of them. The ‘two-pizza team’ structure wasn’t a guideline; it was a mechanism with clear boundaries and accountability. The ‘six-page narrative’ for meetings wasn’t advice; it was a requirement, backed by templates, tooling and explicit expectations, that made compliance automatic. Working backwards from a press release is most definitely not a suggestion; it’s embedded in how products get funded and approved. You might also know that, within the AWS Well-Architected Framework, Amazon teaches customers to use mechanisms as the best approach to operational excellence.[16] I’ve found they apply brilliantly to the complex, dynamic challenges of AI Governance.

At Amazon, and throughout the approach I apply and teach, a mechanism has a precise meaning: a closed-loop, adaptive system that consistently achieves a specific objective. Unlike policies that state intentions or procedures that merely prescribe steps, a mechanism builds desired behaviours into the structure of work itself. It takes defined inputs, transforms them through concrete tools and processes, and produces predictable outputs while continuously improving through feedback loops.

A policy might require teams to test their models for bias. It might even provide detailed instructions and metrics. But it still depends on teams remembering, caring, and executing correctly under pressure. A mechanism, by contrast, makes bias testing a mandatory gate in the deployment pipeline. It automatically runs standardised tests, blocks deployment until issues are resolved, and then improves its test suite based on what it learns from production incidents. The consistency comes not from human discipline but from systems thinking and mechanism design.
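A gate of this kind could be sketched as below. As an assumption for illustration, the standardised test is the adverse impact ratio behind the “four-fifths rule” in the US Uniform Guidelines on Employee Selection Procedures: each group’s selection rate divided by the highest group’s rate, flagged when it falls below 0.8. That rule is a screening heuristic, not a complete fairness test. The function names, the exit-code convention and the validation numbers are hypothetical.

```python
def selection_rates(outcomes: dict) -> dict:
    """Map each group to its selection rate; outcomes maps group -> (selected, assessed)."""
    return {g: sel / total for g, (sel, total) in outcomes.items() if total > 0}


def adverse_impact_failures(outcomes: dict, threshold: float = 0.8) -> list:
    """Groups whose selection rate is below `threshold` times the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values(), default=0.0)
    if best == 0:
        return []  # nobody selected in any group: the ratio is undefined, nothing flagged here
    return sorted(g for g, r in rates.items() if r / best < threshold)


def bias_gate(outcomes: dict) -> int:
    """Hypothetical pipeline step: returns a process exit code; non-zero blocks deployment."""
    failures = adverse_impact_failures(outcomes)
    if failures:
        print(f"BLOCKED: adverse impact ratio below 0.8 for {failures}")
        return 1
    print("PASSED: all groups at or above the four-fifths threshold")
    return 0


# Illustrative validation results, not real data: group -> (selected, assessed).
validation_outcomes = {"group_a": (48, 100), "group_b": (30, 100)}
exit_code = bias_gate(validation_outcomes)
# A CI runner would typically end this step with sys.exit(exit_code).
```

The sketch covers only the gate. The improvement loop described above would add new checks to the suite, such as a new test scenario, each time a production incident reveals a gap.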

The Anatomy of a Mechanism

Every effective mechanism operates through six essential components that work together as an atomic system:

Inputs and Outputs define what the mechanism transforms. Inputs are the controllable elements that flow into the mechanism: risk assessments, code commits, deployment requests, or model metadata. Outputs are the tangible artifacts it produces: completed model cards, audit logs, deployment approvals, or registry records. These outputs frequently become inputs to other mechanisms, creating an interconnected governance system. A model card produced by the documentation mechanism becomes input for the audit mechanism. A bias audit finding becomes an input for the model training mechanism.

A Tool sits at the heart of the mechanism, providing the concrete capability that transforms inputs into outputs. This isn’t a suggestion or guideline but actual software, an automated workflow, a document or a systematic process that makes the desired behaviour either automatic or mandatory. For AI governance, this might be a model card generator integrated into your training pipeline, an automated bias testing framework that runs within a CI/CD pipeline, or a risk assessment system that gates deployment decisions. Skilled people use the tool to achieve consistent execution.

Ownership creates accountability through a single-threaded owner whose success depends on the mechanism’s effectiveness. This person doesn’t just oversee the mechanism; they define it, drive adoption, measure impact, and continuously improve it. Without clear ownership, mechanisms decay into abandoned processes that teams work around. So the owner needs both authority to enforce the mechanism and accountability for its results. When the mechanism fails, it’s their phone that rings at 2am.

Adoption happens when the mechanism becomes embedded so deeply into workflows that using it is easier than avoiding it. The most effective adoption strategy is architectural: make the mechanism a required step in existing processes. Teams can’t deploy without completed model cards. They can’t access production data without privacy review. Code won’t merge without passing fairness tests. When the mechanism blocks progress until satisfied, adoption shifts from optional to inevitable.

Inspection provides continuous visibility into both usage and effectiveness. This goes beyond periodic auditing to include real-time metrics on whether the mechanism is being used (coverage metrics) and whether it’s achieving its goals (outcome metrics). A documentation mechanism tracks not only the existence of model cards but their completeness, currency, and actual usage during incidents. A bias testing mechanism measures test coverage, the diversity of test scenarios, and actions taken on failures.

Continuous Improvement distinguishes mechanisms from static processes. Every failure becomes a lesson, every workaround reveals a gap, every trending metric triggers adjustment. The mechanism includes its own evolution process: how feedback gets collected, how changes are prioritised, how improvements get deployed. It’s this recursive quality that enables mechanisms to strengthen over time rather than decay into bureaucracy.
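To show how the six components fit together, here is a minimal Python sketch of a single documentation mechanism. Everything in it is hypothetical: the `Mechanism` class, the `model_card_tool` function, the field names and the owner address are illustrative. Inputs flow through the tool to outputs, adoption is a blocking check on required inputs, inspection is split into a coverage metric and an outcome metric, and continuous improvement lets a lesson from an incident tighten the mechanism’s own requirements.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Mechanism:
    """Hypothetical, minimal model of the six components of a mechanism."""

    name: str
    owner: str                       # Ownership: a single-threaded owner
    tool: Callable[[dict], dict]     # Tool: transforms an input into an output
    required_fields: list            # Adoption: inputs that must be present to proceed
    processed: set = field(default_factory=set)  # Inspection: systems that used it
    runs: int = 0
    successes: int = 0
    lessons: list = field(default_factory=list)  # Continuous improvement log

    def run(self, item: dict) -> dict:
        """Inputs -> Tool -> Outputs, with adoption enforced as a blocking gate."""
        missing = [f for f in ["system_id", *self.required_fields] if not item.get(f)]
        if missing:
            raise ValueError(f"{self.name} blocked: missing {missing}")
        self.processed.add(item["system_id"])
        self.runs += 1
        output = self.tool(item)
        if output.get("ok"):
            self.successes += 1
        return output

    def coverage(self, inventory: set) -> float:
        """Coverage metric: share of inventoried systems that went through it."""
        if not inventory:
            return 0.0
        return len(self.processed & inventory) / len(inventory)

    def outcome(self) -> float:
        """Outcome metric: share of runs that achieved the objective."""
        return self.successes / self.runs if self.runs else 0.0

    def improve(self, lesson: str, new_field: Optional[str] = None) -> None:
        """Continuous improvement: a lesson can tighten the mechanism itself."""
        self.lessons.append(lesson)
        if new_field and new_field not in self.required_fields:
            self.required_fields.append(new_field)


def model_card_tool(item: dict) -> dict:
    """Hypothetical tool: emit a model card record from training metadata."""
    card = {k: item[k] for k in ("system_id", "intended_use", "eval_metrics")}
    return {"ok": True, "model_card": card}


docs = Mechanism(
    name="model-card",
    owner="owner@example.com",  # hypothetical owner
    tool=model_card_tool,
    required_fields=["intended_use", "eval_metrics"],
)
docs.run({"system_id": "screening-v2", "intended_use": "rank applicants",
          "eval_metrics": {"accuracy": 0.87}})  # illustrative value
print(docs.coverage({"screening-v2", "chat-assist"}), docs.outcome())  # 0.5 1.0

# A production incident reveals a gap, so the mechanism tightens itself.
docs.improve("incident: card did not list known limitations", "known_limitations")
```

In practice each piece would be real infrastructure (a pipeline step, a registry, a dashboard) rather than a field on one object; the class just makes the anatomy visible in one place.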

How Mechanisms Create System-Level Governance

The real power of mechanisms emerges when they connect into coherent systems of governance. Individual mechanisms solve specific problems, but connected mechanisms create comprehensive governance that scales across an organisation.

Take the documentation mechanism as a starting point. When a team trains a model, the mechanism automatically generates a model card pulling metadata from the training environment, performance metrics from validation runs, and architectural decisions from design documents. This model card becomes input to the inventory mechanism. The inventory mechanism uses risk scores to trigger audit mechanisms for high-impact systems. Audit findings feed into training mechanisms that update documentation templates and bias testing suites. Each mechanism strengthens the others.
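The chain described above can be sketched in a few functions, where each mechanism’s output is the next one’s input. This is a minimal illustration under loud assumptions: the function names, the two-value risk tier, the `HIGH_IMPACT_USES` set and the metric names are all hypothetical, a real inventory would score risk far more carefully, and the feedback step is reduced here to adding each audit gap to the bias test suite.

```python
HIGH_IMPACT_USES = {"hiring", "credit"}  # assumption: a deliberately crude risk taxonomy


def documentation(run: dict) -> dict:
    """Documentation mechanism: turn training-run metadata into a model card."""
    return {
        "system_id": run["system_id"],
        "use_case": run["use_case"],
        "metrics": dict(run["validation_metrics"]),
    }


def inventory(registry: dict, card: dict) -> dict:
    """Inventory mechanism: register the card and attach a risk tier."""
    tier = "high" if card["use_case"] in HIGH_IMPACT_USES else "standard"
    registry[card["system_id"]] = {**card, "risk_tier": tier}
    return registry[card["system_id"]]


def audit(entry: dict, required_metrics: set) -> list:
    """Audit mechanism: runs only for high-risk entries and returns missing metrics."""
    if entry["risk_tier"] != "high":
        return []
    return sorted(required_metrics - set(entry["metrics"]))


def improve_suite(bias_suite: set, findings: list) -> set:
    """Feedback loop: each gap an audit finds becomes a standard pipeline test."""
    return bias_suite | set(findings)


registry = {}
bias_suite = {"accuracy"}  # what the training pipeline measures today
run = {
    "system_id": "screening-v2",
    "use_case": "hiring",
    "validation_metrics": {"accuracy": 0.87},  # illustrative value, not real data
}

entry = inventory(registry, documentation(run))
findings = audit(entry, required_metrics={"accuracy", "adverse_impact_ratio"})
bias_suite = improve_suite(bias_suite, findings)
print(entry["risk_tier"], findings, sorted(bias_suite))
```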

This interconnection solves the scale problem that defeats policy-based governance. Instead of requiring hundreds of engineers to understand and correctly apply dozens of policies, you embed requirements into the tools they already use. The bias testing framework runs automatically in their development pipeline. Privacy checks integrate into the data access layer. Security scans execute on every code commit. Governance becomes part of the development process rather than an additional burden.
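As one sketch of embedding a requirement in a tool engineers already use, the Python decorator below wraps a data-access function so that it refuses any team and dataset pair without a completed privacy review. The `APPROVED_ACCESS` set, the `PrivacyReviewRequired` exception, the `load_dataset` function and the team and dataset names are hypothetical; a real implementation would query a review system rather than a hard-coded set.

```python
import functools

# Hypothetical record of completed privacy reviews: (team, dataset) pairs.
APPROVED_ACCESS = {("recruiting-ml", "applications_2024")}


class PrivacyReviewRequired(PermissionError):
    """Raised when a team requests data it has no completed privacy review for."""


def requires_privacy_review(func):
    """Wrap a data-access function so unreviewed (team, dataset) pairs are refused."""

    @functools.wraps(func)
    def wrapper(team: str, dataset: str, *args, **kwargs):
        if (team, dataset) not in APPROVED_ACCESS:
            raise PrivacyReviewRequired(f"{team} has no privacy review for {dataset}")
        return func(team, dataset, *args, **kwargs)

    return wrapper


@requires_privacy_review
def load_dataset(team: str, dataset: str) -> str:
    """Stand-in for the real data access layer."""
    return f"rows from {dataset}"


print(load_dataset("recruiting-ml", "applications_2024"))  # rows from applications_2024
try:
    load_dataset("growth-analytics", "applications_2024")
except PrivacyReviewRequired as err:
    print("blocked:", err)
```

Because the check lives in the access layer, engineers get it for free on every call instead of having to remember a policy.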

The system also handles the complexity of concurrent activities and iterative development that characterise AI work. While data scientists experiment with new architectures, the mechanisms capture their design decisions. As engineers deploy previous versions, the mechanisms enforce testing requirements. When compliance teams assess risks, they work from artifacts the mechanisms automatically generated. The governance system operates continuously across all these parallel activities without requiring centralised direction and enforcement.

The Path Forward

The HireVue story doesn’t end with their 2021 pivot away from facial analysis. It was just another inflection point where the company learned that governance isn’t something you can layer on top of AI systems. It’s something you build into them from the start, and have to actively sustain and develop. Their experience, multiplied across the thousands of organisations building AI capabilities right now, points toward a fundamental truth: we just cannot govern AI through good intentions alone.

The complexity of AI systems, the speed of their evolution, and the scale of their impact demand governance approaches that operate as reliably as the systems themselves. I have found in practice that mechanisms provide that reliability: they transform abstract principles into concrete systems, replace reliance on human perfection with systematic execution (while retaining the expertise and skill of humans), and create improvement loops that strengthen over time.

The path from good intentions to embedded governance isn’t easy, but it’s very achievable. We have to stop asking “what should teams do?” and start asking “what mechanisms make sure they do it?” We need to stop treating compliance with laws or policies as the measure of governance, and stop pretending that a compliance certification is anything more than a glimpse of a single point in time. Instead, we need to start measuring the health of our governance mechanisms. Most importantly, we need to stop treating governance as something that happens to our AI teams and start building it with those engineering and data science teams, to help them innovate safely at speed.

I think this is how we move from the cautionary tale of HireVue to a future where AI governance is as robust, scalable, and reliable as the AI systems it needs to govern. That’s high integrity AI governance.

Thank you as always for reading.

My special thanks if you are one of the 14,000 former colleagues of mine affected by the Amazon layoffs this week. You know better than anyone how important humans are to the true effectiveness of mechanisms. Tools may be the engine, but they’re designed by people, guided by people and corrected by people. I’m sorry that Amazon has been blind to the value every individual brings to guiding and governing safe, secure and lawful AI.

[1] https://www.washingtonpost.com/technology/2019/10/22/ai-hiring-face-scanning-algorithm-increasingly-decides-whether-you-deserve-job/

[2] https://contentenginellc.com/2019/05/21/hirevue-surpasses-ten-million-video-interviews-completed-worldwide/

[3] https://www.globenewswire.com/news-release/2020/10/29/2116850/0/en/HireVue-Records-Surge-in-Anytime-Anywhere-Candidate-Engagement.html

[4] Not every interview used AI scoring, but HireVue has not disclosed how many did.

[5] https://legal.hirevue.com/product-documentation/ai-ethical-principles

[6] https://www.hirevue.com/wp-content/uploads/2025/10/HV_2025_AI-Explainability-Statement.pdf

[7] https://www.globenewswire.com/news-release/2021/01/12/2157356/0/en/HireVue-Leads-the-Industry-with-Commitment-to-Transparent-and-Ethical-Use-of-AI-in-Hiring.html

[8] https://www.wired.com/story/job-screening-service-halts-facial-analysis-applicants/

[9] https://www.psychologicalscience.org/publications/emotional-expressions-reconsidered-challenges-to-inferring-emotion-from-human-facial-movements.html

[10] https://ainowinstitute.org/wp-content/uploads/2023/04/AI_Now_2019_Report.pdf

[11] https://proceedings.mlr.press/v81/buolamwini18a.html

[12] https://www.ilga.gov/Legislation/publicacts/view/101-0260

[13] https://epic.org/documents/in-re-hirevue/

[14] https://www.eeoc.gov/newsroom/us-eeoc-and-us-department-justice-warn-against-disability-discrimination

[15] https://azure.microsoft.com/en-us/blog/responsible-ai-investments-and-safeguards-for-facial-recognition

[16] https://docs.aws.amazon.com/wellarchitected/latest/operational-readiness-reviews/building-mechanisms.html
